Pioneering Algorithmic Robotics and Physics-Aware Autonomy

PRACSYS is a research lab focused on autonomy that holds up when the world pushes back—through contact, uncertainty, and real hardware constraints.

Rigorous Algorithms for a Physical World

Most autonomy failures we've seen don't come from a missing feature; they come from an unstated assumption. A planner that looks elegant on a whiteboard can fall apart the first time friction changes, a cable snags, or a sensor drops a frame.

Our work starts with algorithmic rigor—formal problem statements, explicit constraints, and careful accounting of uncertainty—then we pressure-test those ideas against the physics that actually governs robots and biomedical systems. We care about what happens when the robot touches the environment, not just when it moves through free space.

Field note: When a system behaves "mysteriously," it's often because the model quietly assumed perfect actuation or noiseless state estimation. We try to surface those assumptions early, while the math is still editable.

Collaborative Intelligence

Autonomy is rarely a solo act. Robots share space with people, other robots, and clinical workflows that don't pause for a replan.

We study collaboration as a technical problem: how to represent intent, how to negotiate constraints, and how to keep decision-making stable when multiple agents adapt at once. The goal is not "human in the loop" as a slogan, but concrete interfaces and algorithms that make shared control predictable under time pressure.

Here, the hard part is often coordination latency and partial observability, not raw compute.

Core Research Vectors

PRACSYS sits at the intersection of motion planning, autonomous systems, and computational biomedicine. We keep the scope tight: methods that can be implemented, measured, and iterated on real platforms.

Algorithmic robotics & motion planning

Sampling-based planning, optimization, and hybrid approaches—especially where constraints are nontrivial (contacts, narrow passages, dynamic feasibility). We treat planning as a system component that must behave well under imperfect state and bounded compute.

Physics-aware autonomy

Models that respect dynamics and interaction forces, paired with controllers that can tolerate mismatch. If the only way a method works is by tuning a simulator until it agrees, we consider that a warning sign.

Computational biomedicine

We translate autonomy ideas into biomedical settings where constraints are stricter and data can be messy. The emphasis is on reproducible pipelines and clinically meaningful failure modes, not just aggregate accuracy.

Verification-minded evaluation

We design experiments that expose edge cases: contact transitions, sensor dropouts, and adversarial geometry. A method that looks "optimal" on average can still be brittle in the tails.
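As a rough illustration of the average-versus-tail point, the sketch below compares two sets of per-trial errors by mean, worst case, and the mean of the worst fraction of trials (a CVaR-style tail average). The function name, the `tail_fraction` parameter, and the error values are all hypothetical and made up for this example; they are not results or tooling from the lab.

```python
import math


def tail_summary(errors, tail_fraction=0.1):
    """Summarize per-trial errors by mean, worst case, and tail average.

    The tail average is the mean of the worst `tail_fraction` of trials
    (at least one trial), a simple CVaR-style statistic.
    """
    if not errors:
        raise ValueError("errors must be non-empty")
    ordered = sorted(errors)
    n = len(ordered)
    k = max(1, math.ceil(tail_fraction * n))  # number of trials in the tail
    tail = ordered[-k:]
    return {
        "mean": sum(ordered) / n,
        "worst": ordered[-1],
        "tail_mean": sum(tail) / k,
    }


# Hypothetical per-trial errors (illustrative numbers only).
method_a = [1.0] * 9 + [11.0]  # better on average, one bad trial
method_b = [2.5] * 10          # slightly worse on average, no bad trials

summary_a = tail_summary(method_a)
summary_b = tail_summary(method_b)
print("A:", summary_a)
print("B:", summary_b)
```

In this toy data, the first method wins on the mean while the second wins on the tail average, which is the kind of disagreement an average-only report would hide.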

Supported by Federal Innovation

Parts of our research program have been supported through federal funding mechanisms aimed at high-risk, high-reward autonomy and biomedical computation. That support matters because it lets us run longer experimental cycles and publish negative results when they teach something real.

Context: Our federally supported work has included multi-year research efforts with defined deliverables and open dissemination expectations, which shapes how we document methods and evaluation protocols.

The Challenge of the Sim2Real Gap

Simulation is useful, but it is also persuasive in the wrong way. It can make a fragile method look stable because the world is too clean.

We treat sim2real as an engineering constraint: identify which parameters matter, measure them on hardware, and decide what must be learned versus what must be modeled. For contact-rich tasks, small errors in friction, compliance, or timing can dominate—so we design tests that isolate those effects instead of averaging them away.
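One simple way to ask "which parameters matter" is a one-at-a-time sensitivity sweep: perturb a single parameter, hold the rest at nominal, and record how much the outcome moves. The sketch below does this on a deliberately toy model (a block that coasts during a reaction delay and then brakes under Coulomb friction). The model, the parameter names, and the nominal values are assumptions chosen for illustration, not a description of any specific PRACSYS experiment.

```python
G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance(params):
    """Toy model: distance covered during a delay plus friction braking."""
    v = params["speed"]  # m/s
    coast = v * params["latency"]                  # travel before braking starts
    brake = v ** 2 / (2.0 * params["friction"] * G)  # Coulomb-friction stop
    return coast + brake


def one_at_a_time(model, nominal, rel_step=0.1):
    """Relative change in model output when each parameter alone grows by rel_step."""
    base = model(nominal)
    sensitivities = {}
    for name in nominal:
        perturbed = dict(nominal)  # copy so other parameters stay nominal
        perturbed[name] = nominal[name] * (1.0 + rel_step)
        sensitivities[name] = (model(perturbed) - base) / base
    return sensitivities


# Hypothetical nominal values (illustrative only).
nominal = {"speed": 1.0, "latency": 0.05, "friction": 0.5}
sens = one_at_a_time(stopping_distance, nominal)
ranked = sorted(sens, key=lambda name: abs(sens[name]), reverse=True)

for name in ranked:
    print(f"{name}: {sens[name]:+.3f}")
```

A sweep like this ignores interactions between parameters, so it is a first-pass screen rather than a full sensitivity analysis; its value is in deciding which quantities deserve a careful hardware measurement.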

Some gaps are irreducible in the short term (for example, unmodeled wear or temperature-dependent behavior), so we focus on robustness where it buys the most safety and repeatability.

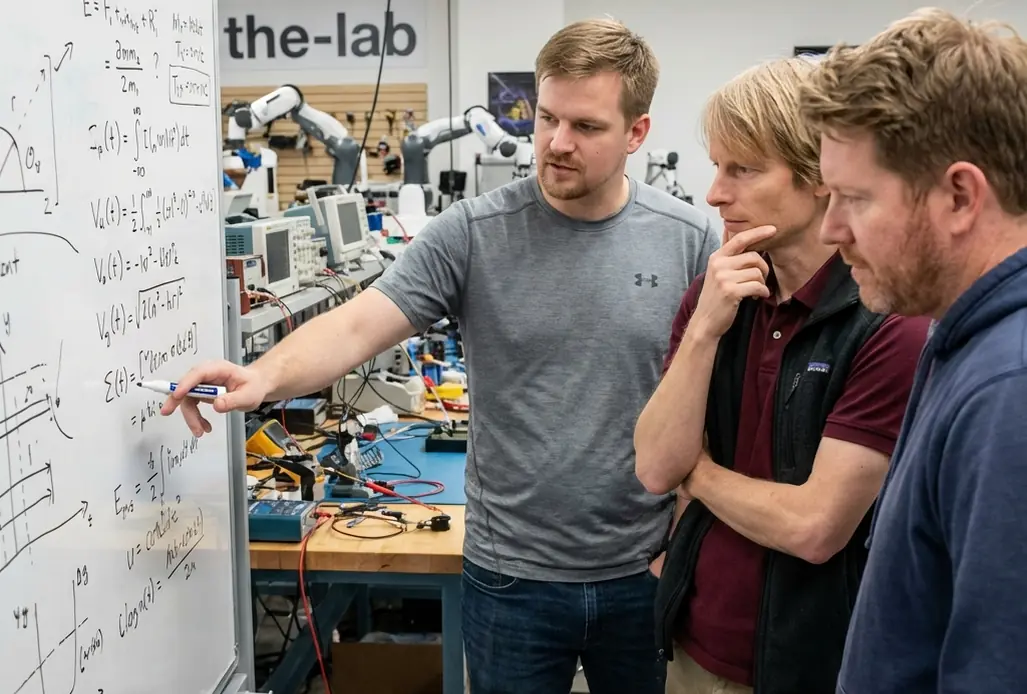

Experimental Infrastructure

We build and maintain experimental setups that force algorithms to confront reality: calibration drift, imperfect sensing, and the occasional mechanical surprise. That infrastructure is not a backdrop; it's part of the methodology.

Our lab workflow is simple: implement the method, define failure modes before running, and keep logs that let us replay decisions step-by-step. When a result looks too clean, we rerun it with a different seed, a different surface, or a slightly different payload. The point is to learn what the algorithm actually depends on.

Interested in collaborating, visiting, or discussing a research fit? Reach out with a short description of the problem and the constraints you care about.

Contact PRACSYS